NVIDIA Introduces Chip Linking Technology

Nvidia unveils NVLink Fusion at Computex 2025, enabling ultra-fast, modular AI chip communication between GPUs, CPUs, and accelerators.

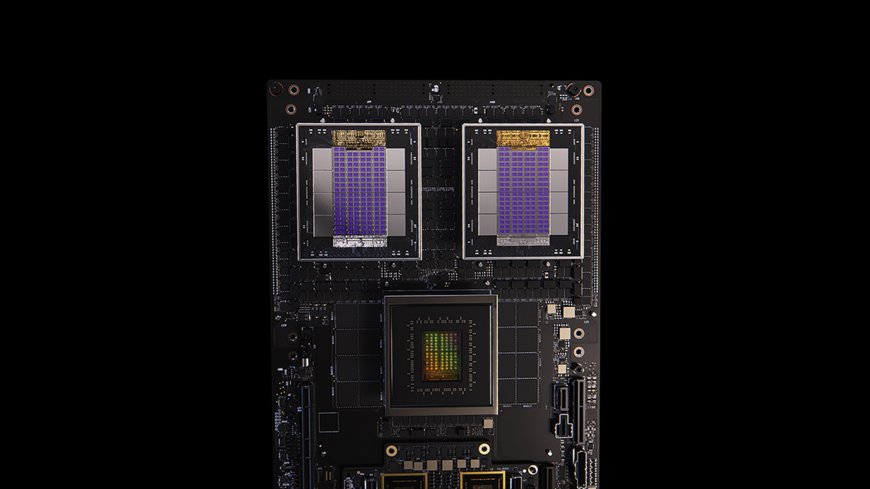

At Computex 2025, Nvidia introduced NVLink Fusion, a next-generation chip-to-chip interconnect aimed at AI systems. The technology enables high-speed data sharing between Nvidia GPUs and third-party processors, opening the door to a modular and customizable AI hardware ecosystem.

What is NVLink Fusion?

Building on Nvidia’s existing NVLink interconnect, NVLink Fusion is reported to offer up to 800 GB/s of bandwidth together with low latency, which matters when the processors in a heterogeneous system must exchange data continuously. This isn’t just about connecting Nvidia’s own chips: the goal is to link Nvidia GPUs with CPUs and custom AI accelerators from other vendors.

According to Business Today, this innovation is expected to transform AI workloads, especially in data centers and large-scale cloud environments.

A Cross-Industry Collaboration

Nvidia is inviting partners across the semiconductor industry to adopt and integrate NVLink Fusion. Companies like Fujitsu, Qualcomm, Marvell, and MediaTek are reportedly exploring or already working on incorporating the technology into their products. Design tool vendors like Synopsys and Cadence are also supporting it with EDA tools.

This strategy reflects Nvidia's shift toward a cooperative AI ecosystem, where performance isn't limited by brand silos. The Indian Express has more on this collaborative push, along with coverage of the broader AI trends shaping the industry.

Key Technical Benefits

Here’s what makes NVLink Fusion such a breakthrough:

- Chiplet-based architecture: Allows third-party CPUs or accelerators to integrate directly with Nvidia GPUs.
- High-speed data transfers: With 800 GB/s speeds, it significantly outperforms traditional PCIe lanes.
- Scalability: Supports scale-up and scale-out system designs.
- Low latency: Ideal for real-time AI training, inference, and simulation workloads.
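
To put the PCIe comparison in perspective, here is a rough back-of-the-envelope sketch in Python. It takes the 800 GB/s figure quoted above at face value and compares it with the theoretical peak of a PCIe 5.0 x16 link (32 GT/s per lane with 128b/130b encoding). The 140 GB payload is a hypothetical example, roughly the weights of a 70-billion-parameter model stored at 16 bits each, and the function names are illustrative only. Real links lose some throughput to protocol overhead, so treat the numbers as upper bounds rather than benchmarks.

```python
# Back-of-the-envelope link comparison.
# All figures are theoretical peaks, not measurements.

NVLINK_FUSION_GBPS = 800.0  # GB/s, the figure quoted in this article (assumed as-is)


def pcie_peak_gbps(gt_per_s: float, lanes: int = 16,
                   encoding: float = 128 / 130) -> float:
    """Peak one-direction bandwidth of a PCIe link in GB/s.

    gt_per_s: raw transfer rate per lane (32 GT/s for PCIe 5.0).
    encoding: fraction of raw bits that carry payload (128b/130b).
    """
    return gt_per_s * encoding * lanes / 8  # divide by 8: bits -> bytes


def transfer_seconds(payload_gb: float, bandwidth_gbps: float) -> float:
    """Ideal time to move payload_gb gigabytes over a link."""
    return payload_gb / bandwidth_gbps


pcie5 = pcie_peak_gbps(32.0)          # PCIe 5.0 x16
speedup = NVLINK_FUSION_GBPS / pcie5  # ratio of the two peak rates

PAYLOAD_GB = 140.0  # hypothetical: ~70B parameters at 2 bytes each

if __name__ == "__main__":
    print(f"PCIe 5.0 x16 peak:   {pcie5:.1f} GB/s")
    print(f"Quoted NVLink rate:  {NVLINK_FUSION_GBPS:.1f} GB/s")
    print(f"Ratio:               {speedup:.1f}x")
    print(f"140 GB over PCIe:    {transfer_seconds(PAYLOAD_GB, pcie5):.2f} s")
    print(f"140 GB over NVLink:  "
          f"{transfer_seconds(PAYLOAD_GB, NVLINK_FUSION_GBPS):.3f} s")
```

Under these assumptions a PCIe 5.0 x16 link peaks at about 63 GB/s per direction, so the quoted NVLink Fusion figure is roughly 12.7 times higher. The coverage does not say whether 800 GB/s is a per-direction or an aggregate number, and that choice would change the ratio.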

For more detail, see Nvidia’s official update on NVLink Fusion. ASUS’s Computex press release covers related announcements and highlights upcoming AI and gaming integrations.

Why It Matters

By decoupling AI system design from closed architectures, Nvidia is democratizing AI compute infrastructure. This has far-reaching implications for industries building:

- Custom AI accelerators
- Cloud-native inference platforms
- Advanced robotics and edge devices

More than a hardware upgrade, NVLink Fusion is a strategic enabler—unlocking performance, flexibility, and collaboration at a time when AI workloads are exploding in scale and complexity.

The Bottom Line

NVLink Fusion is poised to become a cornerstone of the next generation of AI systems. As Nvidia opens its ecosystem to more chipmakers and tool providers, we’re likely to see a new wave of innovation in high-performance computing.

Utej
